AI isn’t new – it’s been around since the mid-20th century. But for decades, it felt more like science fiction than something that touched our daily lives. Even when Deep Blue beat chess champion Garry Kasparov in 1997, it was impressive but still distant. Then came ChatGPT. Hitting 100 million users in just two months, it didn’t just make waves – it made AI a part of our everyday world.

So, what’s the secret sauce behind ChatGPT?

A big part of its magic comes from the Transformer architecture. Back in 2017, a paper called “Attention Is All You Need” hit the scene. It was only 15 pages long, but it completely changed the game in AI, especially for Large Language Models (LLMs). This was the paper that introduced the Transformer architecture. In this article, we’re going to break the Transformer down in simple terms and explore why it mattered so much.

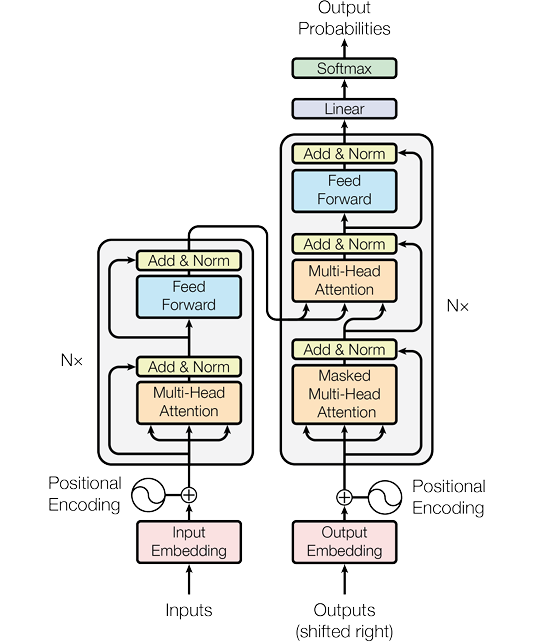

Before we explain the Transformer architecture in our own words, let’s look at how the original paper describes it.

Section 3 of the paper describes the model architecture as follows:

“Most competitive neural sequence transduction models have an encoder-decoder structure [5, 2, 35]. Here, the encoder maps an input sequence of symbol representations (x₁, …, xₙ) to a sequence of continuous representations z = (z₁, …, zₙ). Given z, the decoder then generates an output sequence (y₁, …, yₘ) of symbols one element at a time. At each step the model is auto-regressive [10], consuming the previously generated symbols as additional input when generating the next.

The Transformer follows this overall architecture using stacked self-attention and point-wise, fully connected layers for both the encoder and decoder, shown in the left and right halves of Figure 1, respectively.”

The architecture is illustrated in the accompanying diagram (Figure 1 of the paper).

“Attention Is All You Need” (Ashish Vaswani et al., 2017, pp. 2–3)
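The quote’s key idea, that the decoder is auto-regressive, can be sketched as a simple generation loop. This is a minimal illustration under stated assumptions, not code from the paper: `next_token` is a hypothetical stand-in for a trained decoder (it just replays a fixed sentence), and the `<eos>` end-of-sequence marker and the greedy loop are our own illustrative choices.

```python
from typing import Callable, List

# Hypothetical stand-in for a trained decoder. A real Transformer decoder would
# also attend to the encoder output z; this toy version just replays a sentence.
TARGET = ["The", "Sky", "Is", "The", "Limit", "<eos>"]

def next_token(generated: List[str]) -> str:
    return TARGET[len(generated)]

def generate(step: Callable[[List[str]], str], max_len: int = 20) -> List[str]:
    """Auto-regressive decoding: every new token is predicted from the tokens
    generated so far, then appended so it becomes input for the next step."""
    output: List[str] = []
    for _ in range(max_len):
        token = step(output)   # previous outputs are the model's extra input
        if token == "<eos>":   # assumed end-of-sequence marker
            break
        output.append(token)
    return output

print(generate(next_token))  # ['The', 'Sky', 'Is', 'The', 'Limit']
```

The important part is the feedback loop: the model cannot produce token t until tokens 1 through t-1 already exist.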

Tough to digest, isn’t it? As you continue reading the paper, you’ll notice the authors have packed a significant amount of information into just 15 pages. We won’t attempt to unpack the entire paper – after all, decompressing something into an even smaller space isn’t logically or physically feasible. Instead, we’ll focus on explaining how the model works and why it’s considered innovative, using an analogy to make it easier to grasp.

Transformer Architecture Analogy: The Smart Reader

Imagine you’re reading the sentence: “The Sky Is The Limit With AI + Innovation.”

A traditional reader (like a recurrent neural network, or RNN) reads it word by word, finishing one word before moving to the next. A Transformer, by contrast, takes in the whole sentence at once and weighs the relationships between all the words together. Here’s how:

Self-Attention

Instead of just moving from “The” → “Sky” → “Is” → “The” → “Limit”, the Transformer looks at all the words at once and works out how much each word matters to the others. It recognizes that:

- “Sky” and “Limit” are closely related.

- “AI + Innovation” adds extra meaning to what is limitless.

- “The Sky Is The Limit” is a phrase, so its meaning should be kept intact.

A traditional model might struggle to carry these connections across many steps, but a Transformer relates every word directly to every other word, even when they’re far apart.
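Under the hood, the paper implements this idea as scaled dot-product attention, Attention(Q, K, V) = softmax(QKᵀ / √d_k) V: each word’s query vector is compared with every word’s key vector, the scores become weights through softmax, and the output is a weighted mix of value vectors. Below is a minimal pure-Python sketch. As a simplifying assumption we use the raw word vectors directly as Q, K and V (the paper first applies learned linear projections), and the three 2-dimensional embeddings are hypothetical toy values.

```python
import math
from typing import List

Matrix = List[List[float]]

def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    out = []
    for qi in q:
        # Compare this query with every key, regardless of distance in the sentence.
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        weights = softmax(scores)
        # Output = attention-weighted average of the value vectors.
        out.append([sum(w * vj[d] for w, vj in zip(weights, v)) for d in range(len(v[0]))])
    return out

# Hypothetical toy embeddings for "Sky", "Is", "Limit".
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(x, x, x):  # self-attention: Q, K and V all come from x
    print([round(val, 3) for val in row])
```

Because every query is scored against every key in one pass, “Sky” and “Limit” are linked directly, with no chain of intermediate steps between them.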

Multi-Head Attention

The Transformer doesn’t just look at meaning from one angle – it processes it in multiple ways at the same time:

- One part focuses on the main idea (limitless possibilities).

- Another part connects “AI + Innovation” to breaking limits.

- Another examines word structure to make sure it flows naturally.

This is one reason Transformers generate more fluent and meaningful text than older models.
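In the paper, multi-head attention runs several attention operations side by side, each with its own learned projections of the input, then concatenates the heads and mixes them with an output projection. The sketch below shows that wiring with two heads. The projection weights are random placeholders (an assumption standing in for trained weights), so the numbers themselves mean nothing; the point is that each head views the sentence through a different projection.

```python
import math
import random
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention for a single head."""
    d_k = len(k[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        out.append([sum(e / total * vj[d] for e, vj in zip(exps, v)) for d in range(len(v[0]))])
    return out

def multi_head_attention(x: Matrix, heads: list, w_o: Matrix) -> Matrix:
    """Each head projects x with its own (W_q, W_k, W_v) and attends separately;
    head outputs are concatenated per word and mixed by the output projection w_o."""
    head_outputs = [attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))
                    for w_q, w_k, w_v in heads]
    concat = [[val for h in head_outputs for val in h[i]] for i in range(len(x))]
    return matmul(concat, w_o)

def random_matrix(rng: random.Random, rows: int, cols: int) -> Matrix:
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)              # fixed seed so the run is repeatable
d_model, num_heads = 4, 2
d_k = d_model // num_heads          # each head works in a smaller subspace
heads = [tuple(random_matrix(rng, d_model, d_k) for _ in range(3)) for _ in range(num_heads)]
w_o = random_matrix(rng, num_heads * d_k, d_model)

x = random_matrix(rng, 3, d_model)  # hypothetical embeddings for 3 words
out = multi_head_attention(x, heads, w_o)
print(len(out), len(out[0]))        # 3 4: one d_model-sized vector per word
```

Splitting d_model across heads keeps the total cost close to that of a single full-width head, while letting each head specialize in a different kind of relationship.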

Positional Encoding

Since the model doesn’t read word by word, it needs another way to know the order of the words.

- “The Sky” needs to stay before “Is The Limit.”

- “With AI + Innovation” modifies “The Limit,” not “The Sky.”

Positional encoding acts like invisible labels that help the Transformer remember the correct word order without needing to read sequentially.
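The original paper builds these “invisible labels” from sine and cosine waves of different frequencies, PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)), and adds them to the word embeddings. A minimal sketch follows. The word embeddings are hypothetical toy values, and d_model = 4 is chosen only to keep the vectors short (the paper’s base model uses 512).

```python
import math
from typing import List

def positional_encoding(seq_len: int, d_model: int) -> List[List[float]]:
    """Sinusoidal positional encodings, one d_model-sized vector per position."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share one frequency: sine on even, cosine on odd.
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

words = ["The", "Sky", "Is", "The", "Limit"]
d_model = 4
# Hypothetical embeddings: both occurrences of "The" share the same vector.
embedding = {"The": [0.1, 0.2, 0.3, 0.4], "Sky": [0.5, 0.1, 0.0, 0.2],
             "Is": [0.0, 0.3, 0.1, 0.1], "Limit": [0.4, 0.4, 0.2, 0.0]}
pe = positional_encoding(len(words), d_model)
inputs = [[e + p for e, p in zip(embedding[w], pe[pos])] for pos, w in enumerate(words)]

print(pe[0])                   # [0.0, 1.0, 0.0, 1.0]: sin(0) and cos(0)
print(inputs[0] != inputs[3])  # True: same word, different position
```

After the encodings are added, the first and second “The” enter the model as different vectors, which is how order information survives even though all words are processed together.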

Feedforward Processing

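Once the Transformer has worked out these relationships, each word’s updated representation passes through a small feedforward network, and the stacked layers compile everything into a clear understanding: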

“This sentence means AI and innovation unlock limitless possibilities.”
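In the paper, this feedforward step is a small two-layer network applied to each position separately and identically: FFN(x) = max(0, xW₁ + b₁)W₂ + b₂. The sketch below uses tiny hand-picked weights to show the shape of the computation; they are hypothetical (a real model learns them, and the paper uses an inner size of 2048 with d_model = 512).

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]

def linear(x: Vector, w: Matrix, b: Vector) -> Vector:
    # w has shape (len(x), len(b)): out[j] = sum_i x[i] * w[i][j] + b[j]
    return [sum(xi * w[i][j] for i, xi in enumerate(x)) + b[j] for j in range(len(b))]

def feed_forward(x: Vector, w1: Matrix, b1: Vector, w2: Matrix, b2: Vector) -> Vector:
    hidden = [max(0.0, h) for h in linear(x, w1, b1)]  # ReLU, i.e. max(0, .)
    return linear(hidden, w2, b2)

def position_wise_ffn(seq: List[Vector], w1, b1, w2, b2) -> List[Vector]:
    # The same weights are applied to every position, one position at a time.
    return [feed_forward(x, w1, b1, w2, b2) for x in seq]

# Hypothetical weights: d_model = 2, inner size = 4.
w1 = [[1.0, -1.0, 0.5, 0.0],
      [0.0, 1.0, 0.5, -1.0]]
b1 = [0.0, 0.0, 0.0, 0.0]
w2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, -1.0]]
b2 = [0.0, 0.0]

seq = [[1.0, 2.0], [-1.0, 0.5]]  # two positions, e.g. outputs of an attention layer
print(position_wise_ffn(seq, w1, b1, w2, b2))  # [[2.5, 2.5], [0.0, 1.5]]
```

Attention mixes information across words; this step then transforms each word’s vector on its own, which is why it can also run for all positions in parallel.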

This example shows how a Transformer processes and relates the words and phrases in a sentence, much as a human reader makes connections to derive meaning.

Innovation and Impact: Explained Further

Now let’s go through the advances the Transformer introduced and connect each of them to its effect on the field of AI.

Attention Mechanism

- Focus on Relevant Information: The attention mechanism allows the model to focus on the most relevant parts of the input sequence when processing each element. This enables it to capture complex relationships and dependencies between words, even those far apart in the sequence.

- Improved Performance: This selective attention leads to better performance on various natural language processing tasks, such as machine translation and text summarization.

Parallel Processing

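- Faster Training: Unlike traditional recurrent neural networks (RNNs), which process a sequence one step at a time, the Transformer can process the entire input sequence in parallel. This makes much better use of parallel hardware such as GPUs and significantly speeds up training. It also shortens the path between distant words, which makes long-range dependencies easier to learn, although comparing every pair of positions means the cost of self-attention grows quadratically with sequence length.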
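The difference in dependency structure fits in a few lines. The toy RNN below uses a simplified recurrence made up for illustration, h_t = tanh(x_t + 0.5·h_{t-1}), so step t has to wait for step t-1. The attention output for each position (here without projections or √d_k scaling, a simplifying assumption) reads only the inputs, so positions can be computed independently and in any order. Python threads only demonstrate that independence; the real speedup comes from running these computations as batched matrix operations on GPUs.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List

Vector = List[float]

def rnn_states(xs: List[Vector], w: float = 0.5) -> List[Vector]:
    """Toy recurrence h_t = tanh(x_t + w * h_{t-1}): inherently sequential."""
    h = [0.0] * len(xs[0])
    states = []
    for x in xs:  # step t must wait for h from step t-1
        h = [math.tanh(xi + w * hi) for xi, hi in zip(x, h)]
        states.append(h)
    return states

def attend(i: int, xs: List[Vector]) -> Vector:
    """Simplified self-attention output for position i.
    It reads only the inputs xs, never another position's output."""
    scores = [sum(a * b for a, b in zip(xs[i], xj)) for xj in xs]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [sum(e / total * xj[d] for e, xj in zip(exps, xs)) for d in range(len(xs[0]))]

xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical word vectors

states = rnn_states(xs)  # must run in order

sequential = [attend(i, xs) for i in range(len(xs))]
with ThreadPoolExecutor() as pool:  # all positions submitted at once
    parallel = list(pool.map(lambda i: attend(i, xs), range(len(xs))))

print(parallel == sequential)  # True: positions don't depend on each other
```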

Scalability

- Handling Large Datasets: The Transformer’s architecture is designed to scale well, allowing it to be trained on massive datasets and handle complex tasks. This scalability has been crucial for the development of large language models (LLMs) like GPT-4, Gemini, and LLaMA.

Versatility

- Adaptability to Different Tasks: The Transformer’s architecture can be adapted to various NLP tasks beyond machine translation, including text summarization, question answering, and sentiment analysis.

- General-Purpose Architecture: This versatility suggests that the Transformer could become a general-purpose architecture for AI, applicable to other domains beyond NLP.

Impact on the Field

- Foundation for LLMs: The Transformer has become the foundation for many state-of-the-art LLMs, driving significant progress in NLP and AI.

- New Possibilities: Its innovative approach has opened up new possibilities for AI applications and research, leading to advancements in areas like chatbots, code generation, and drug discovery.

In summary, the Transformer architecture is considered innovative because of its attention mechanism, parallel processing capabilities, scalability, and significant impact on the field of AI. It has revolutionized how we approach natural language processing and has paved the way for powerful AI systems that can understand and generate human language with remarkable accuracy and fluency.
